The current landscape, dominated by noisy intermediate-scale quantum (NISQ) devices, operates under an inherent and limiting constraint: an unfavorable trade-off between circuit depth and fidelity. While physical qubit fidelity has steadily improved, the logical error rates of 10⁻¹⁵ to 10⁻¹⁸ required for useful computations are out of reach through physical engineering alone. This is precisely where Quantum Error Correction (QEC) steps in, acting as the indispensable digital coach for the quantum system. We celebrate the progress in qubit count and connectivity, but the next true breakthrough lies in implementing QEC protocols, such as the Surface Code, which use entanglement to encode a single logical qubit redundantly across multiple physical qubits [Ref. 1]. This paradigm shift, from simply building more qubits to building reliable logical qubits, defines the transition to Fault-Tolerant Quantum Computing (FTQC).

Turning noise into power

Every quantum processor lives under constant assault from its own environment. Stray photons, flux noise, and charge fluctuations conspire to randomize fragile quantum states. QEC turns that same noise from a destructive force into a measurable, correctable signal: noise becomes information and, ultimately, power.

At its core, QEC turns unreliable physical systems into highly reliable logical units. It encodes a single logical qubit into a highly entangled state spread across multiple physical qubits, safeguarding information not by preventing errors but by ensuring that errors on a few individual qubits can be detected and corrected before they corrupt the logical state. Stabilizers are measured repeatedly to reveal syndromes, which indicate the presence and nature of errors without disturbing the encoded data. Decoders then analyze these syndromes to infer the most likely error pattern and determine the corrections needed to recover the logical state.

This continuous cycle of detection, decoding, and correction forms the foundation of fault-tolerant quantum computation.
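To make the detect, decode, correct cycle concrete, here is a minimal classical sketch of the three-qubit bit-flip repetition code, the simplest stabilizer code. It assumes independent bit-flip (X) errors only and reads the parity checks Z0Z1 and Z1Z2 straight from classical bits; a real device measures them through ancilla qubits without reading the data, and must also handle phase errors. All function names are illustrative.

```python
import random

# Parity checks of the 3-qubit bit-flip code: s0 = q0 XOR q1 (Z0Z1), s1 = q1 XOR q2 (Z1Z2).
# Lookup-table decoder: each syndrome points to the single qubit most likely flipped.
SYNDROME_TABLE = {
    (0, 0): None,  # no error detected
    (1, 0): 0,     # only Z0Z1 fired -> qubit 0 flipped
    (1, 1): 1,     # both checks fired -> qubit 1 flipped
    (0, 1): 2,     # only Z1Z2 fired -> qubit 2 flipped
}


def encode(logical_bit):
    """Encode one logical bit redundantly across three physical bits."""
    return [logical_bit] * 3


def apply_bit_flips(qubits, p, rng):
    """Flip each physical bit independently with probability p."""
    return [q ^ int(rng.random() < p) for q in qubits]


def measure_syndrome(qubits):
    """Parities reveal where an error sits, not the encoded value itself."""
    return (qubits[0] ^ qubits[1], qubits[1] ^ qubits[2])


def decode_and_correct(qubits):
    """Look up the most likely single flip and undo it."""
    flip = SYNDROME_TABLE[measure_syndrome(qubits)]
    corrected = list(qubits)
    if flip is not None:
        corrected[flip] ^= 1
    return corrected


def estimate_logical_error_rate(p, shots=50_000, seed=1):
    """Monte Carlo estimate of how often one correction cycle fails."""
    rng = random.Random(seed)
    failures = 0
    for _ in range(shots):
        noisy = apply_bit_flips(encode(0), p, rng)
        if decode_and_correct(noisy) != encode(0):
            failures += 1
    return failures / shots


if __name__ == "__main__":
    for p in (0.01, 0.05, 0.1):
        exact = 3 * p**2 - 2 * p**3  # probability of two or more flips
        print(f"p = {p:.2f} -> p_L ~ {estimate_logical_error_rate(p):.4f} (exact {exact:.4f})")
```

Any single flip is corrected, so the code fails only when two or more bits flip in the same cycle, giving p_L = 3p² − 2p³ (about 3 × 10⁻⁴ at p = 1%).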

Where we are now

- Superconducting qubits: Google was the first to demonstrate the central principle of quantum error correction on its Willow chip, achieving an exponential reduction in logical error rate as the code distance, and with it the qubit count, increased. Complementing this, IBM’s multi-round subsystem-code experiments applied matching and maximum-likelihood decoders across repeated cycles, a foundational proof that active QEC can track evolving error syndromes. [Ref. 2]

- Theory and qLDPC progress: IBM’s later paper introduced quantum low-density parity-check (qLDPC) codes with a high threshold (~0.7%), low circuit depth, and hardware-compatible connectivity graphs. These codes promise reduced overhead for future logical architectures. [Ref. 2]

- Trapped ions and neutral atoms: Recent experiments demonstrate encoded logical teleportation and single-cycle QEC routines, confirming that QEC is viable across platforms. Neutral-atom devices have begun to sustain multiple QEC cycles, highlighting the importance of fast, high-fidelity readout and reset. [Ref. 3] [Ref. 4]
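The overhead argument for qLDPC codes can be checked with simple arithmetic on code parameters [[n, k, d]] (n data qubits, k logical qubits, distance d). The sketch below compares the rotated surface code, one [[d², 1, d]] patch plus d² − 1 measurement qubits per logical qubit, with the [[144, 12, 12]] bivariate bicycle ("gross") code from IBM’s qLDPC work, assuming one check qubit per data qubit (288 physical qubits in total). Equal distance is only a rough proxy for equal logical error rate under circuit-level noise, so the ratio is indicative rather than exact.

```python
def surface_code_physical_qubits(d):
    """Rotated surface code patch: d*d data qubits plus d*d - 1 measurement qubits."""
    return 2 * d * d - 1


def surface_code_total(k, d):
    """One independent patch per logical qubit."""
    return k * surface_code_physical_qubits(d)


# [[144, 12, 12]] bivariate bicycle code, counting one check qubit per data qubit.
GROSS_CODE = {"n": 144, "k": 12, "d": 12, "physical": 288}

if __name__ == "__main__":
    k, d = GROSS_CODE["k"], GROSS_CODE["d"]
    surface = surface_code_total(k, d)
    qldpc = GROSS_CODE["physical"]
    print(f"{k} logical qubits at distance {d}:")
    print(f"  surface code: {surface} physical qubits ({surface // k} per logical qubit)")
    print(f"  gross code:   {qldpc} physical qubits ({qldpc // k} per logical qubit)")
    print(f"  ratio:        {surface / qldpc:.1f}x")
```

At the same distance, the surface code needs 3,444 physical qubits for 12 logical qubits versus 288 for the qLDPC code, roughly a 12× difference. The price is longer-range connectivity than the surface code’s nearest-neighbor grid.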

The scaling cost

Milestones like Willow are pivotal in the journey toward FTQC.

For now, reaching a sufficiently low logical error rate costs 100 to 1,000 physical qubits per logical qubit. The physical qubits must also be good enough, that is, “below threshold”, and in the long run, for practical purposes, at least a factor of 10 below it. The threshold is the noise strength below which QEC helps: there, making a logical qubit bigger lowers its error rate exponentially. Two related notions are worth distinguishing:

- Pseudo-threshold: The point where the logical error rate (p_L) drops below the physical error rate (p), confirming the code is beneficial at a fixed size.

- Critical threshold (p_th): The fundamental noise limit below which increasing the code distance (d) yields an exponential suppression of p_L. This is the only true measure of QEC scalability.
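The difference between the two thresholds shows up in the widely used heuristic scaling law p_L ≈ A (p / p_th)^((d+1)/2). The sketch below assumes p_th = 1% and a prefactor A = 0.1; both are illustrative values, not measurements. Below p_th, a larger distance always lowers p_L, yet a small code can still be worse than a bare physical qubit: the pseudo-threshold, where p_L = p, depends on d and only approaches p_th as d grows.

```python
P_TH = 0.01      # assumed critical threshold (1%)
PREFACTOR = 0.1  # assumed fitting constant A


def logical_error_rate(p, d, p_th=P_TH, a=PREFACTOR):
    """Heuristic surface-code scaling: p_L = A * (p / p_th) ** ((d + 1) / 2).

    Only meaningful near and below threshold; far above it the formula exceeds 1.
    """
    return a * (p / p_th) ** ((d + 1) / 2)


def pseudo_threshold(d, p_th=P_TH, a=PREFACTOR):
    """Solve A * (p / p_th) ** m = p for p, with m = (d + 1) / 2."""
    if d < 3:
        raise ValueError("pseudo-threshold is defined here for d >= 3")
    m = (d + 1) / 2
    return (p_th ** m / a) ** (1 / (m - 1))


if __name__ == "__main__":
    for p in (0.005, 0.012):  # half the threshold, then slightly above it
        rates = ", ".join(f"d={d}: {logical_error_rate(p, d):.2e}" for d in (3, 5, 7, 9))
        print(f"p = {p}: {rates}")
    for d in (3, 5, 7, 9):
        print(f"pseudo-threshold at d={d}: {pseudo_threshold(d):.4f}")
```

With these assumed values, at p = 0.5% the logical error rate falls from 2.5 × 10⁻² at d = 3 to about 3.1 × 10⁻³ at d = 9, but among the distances shown it only drops below p itself at d = 9. At p = 1.2%, p_L rises with every increase in d.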

This enormous scaling factor drives requirements for larger chip footprints, deeper cryogenic infrastructure, and highly complex control stacks.

The lesson is consistent across theory and experiment: scaling quantum computers is not about silencing noise — it’s about learning to use it.

Google's Milestone

Google’s introduction of the Willow quantum chip marks a decisive experimental step into the below-threshold regime that fault tolerance requires. For the first time, researchers simultaneously achieved two crucial QEC benchmarks: operating below the pseudo-threshold and, more importantly, below the critical error threshold. [Ref. 5]

The team demonstrated this below-threshold regime by implementing the Surface Code on a series of growing qubit arrays, from 3×3 up to 7×7 data-qubit grids (code distances 3, 5, and 7). As the code distance increased, the logical error rate was systematically suppressed, falling by a factor of 2.14 with each step up in distance. This experiment is a major milestone, validating the decades-old theoretical promise of quantum error correction. Willow’s performance reflects a sustained focus on qubit quality and the successful integration of the hardware and control advances needed to stabilize such a large logical qubit.
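The reported suppression factor can be turned into a rough resource estimate. The sketch below assumes that Λ = 2.14 stays constant at larger distances, which is itself an extrapolation, and starts from an assumed per-cycle logical error rate of 1.4 × 10⁻³ at d = 7 (an illustrative input; see [Ref. 5] for measured values). Physical qubits are counted as 2d² − 1 for a rotated surface-code patch. Function names are hypothetical.

```python
LAMBDA_WILLOW = 2.14  # error suppression per distance step of 2 (from the text)


def extrapolate(p_ref, d_ref, d, lam):
    """p_L(d) = p_L(d_ref) / lam ** ((d - d_ref) / 2), assuming lam stays constant."""
    return p_ref / lam ** ((d - d_ref) / 2)


def distance_for_target(p_ref, d_ref, target, lam):
    """Smallest odd distance >= d_ref whose extrapolated p_L meets the target."""
    if lam <= 1:
        raise ValueError("no suppression: above threshold, larger codes do not help")
    d = d_ref
    while extrapolate(p_ref, d_ref, d, lam) > target:
        d += 2
    return d


def rotated_surface_code_qubits(d):
    return 2 * d * d - 1  # d*d data qubits + (d*d - 1) measurement qubits


if __name__ == "__main__":
    p_ref, d_ref, target = 1.4e-3, 7, 1e-6  # assumed starting point and target
    for lam in (LAMBDA_WILLOW, 4.0, 10.0):
        d = distance_for_target(p_ref, d_ref, target, lam)
        print(f"Lambda = {lam:>5}: d = {d}, ~{rotated_surface_code_qubits(d)} physical qubits")
```

Under these assumptions, reaching 10⁻⁶ per cycle needs d = 27 (about 1,457 physical qubits) at Λ = 2.14, but only d = 15 (449 qubits) at Λ = 10. A larger Λ corresponds to operating further below threshold, which is why that margin matters so much for overhead.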

Willow also executed a standard benchmark, random circuit sampling, in under five minutes; the same task is estimated to take the fastest classical supercomputers 10²⁵ years, setting a new bar for beyond-classical quantum computation.

The real-time decoding challenge

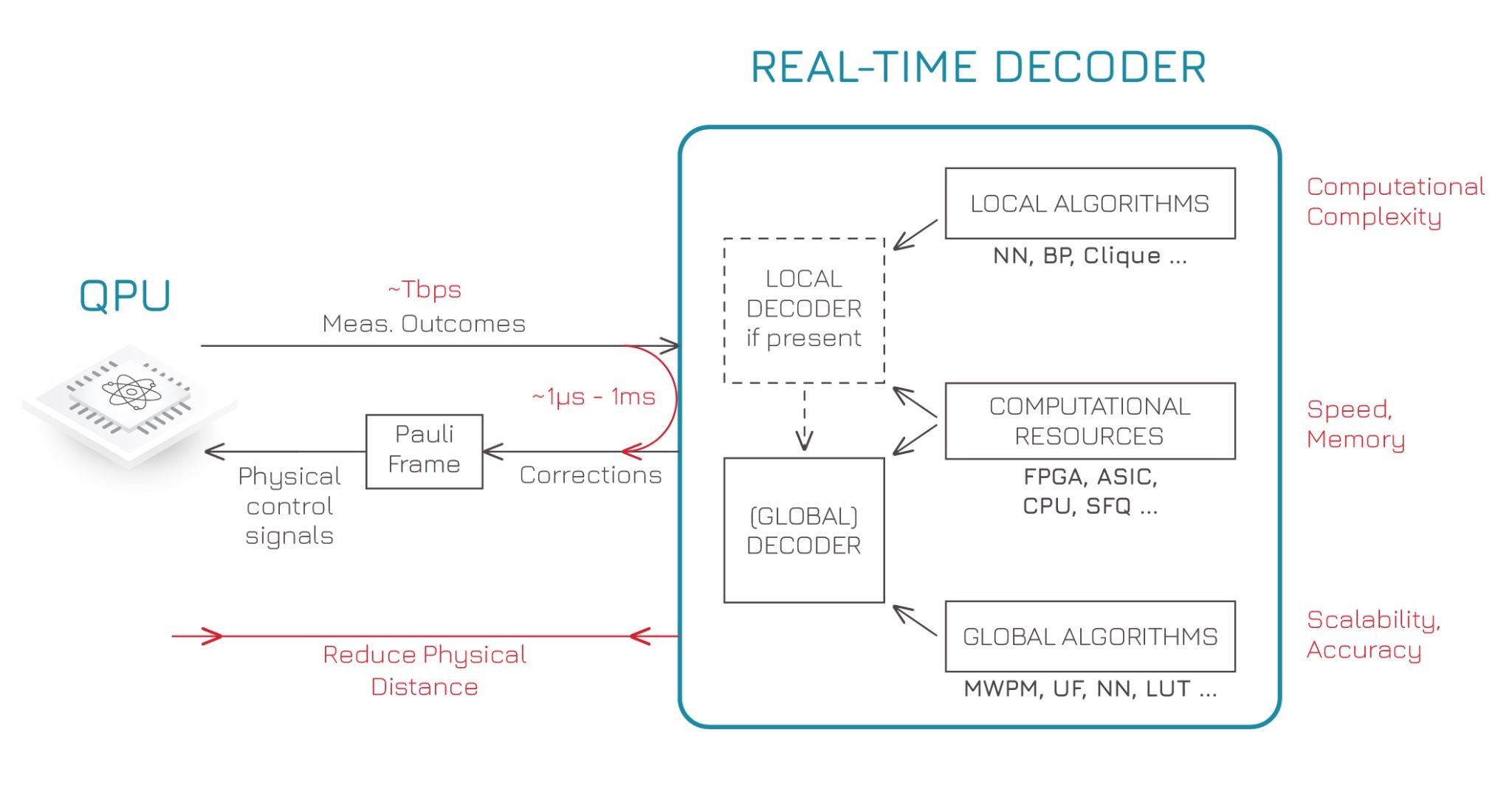

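In a fault-tolerant quantum computer, stabilizer measurements generate a continuous stream of binary data. Detecting an error is one thing; correcting it in time is another. Pauli corrections can be tracked in software through Clifford gates, but a non-Clifford gate such as T depends on which corrections are still pending, so the decoder must deliver its result before that gate executes. That’s the crux of real-time decoding.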
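Why the deadline sits at the non-Clifford gate can be shown with a minimal Pauli-frame tracker, sketched below under simplifying assumptions (single-qubit H, S, T and CNOT only, global phases ignored; the class and method names are hypothetical). Clifford gates map Paulis to Paulis, so a pending correction is just a bit update in memory. At a T gate, however, T X T† = e^{-iπ/4} S X is not a Pauli, so whether an extra S† must be applied physically depends on the frame being fully decoded.

```python
class PauliFrame:
    """Minimal Pauli-frame tracker (illustrative, not a production API).

    x[q] / z[q] record a pending X / Z correction on qubit q that is logged
    in software instead of being applied to the hardware.
    """

    def __init__(self, n_qubits):
        self.x = [0] * n_qubits
        self.z = [0] * n_qubits

    def record(self, qubit, pauli):
        """Log a decoder-issued correction ('X', 'Y' or 'Z')."""
        if pauli in ("X", "Y"):
            self.x[qubit] ^= 1
        if pauli in ("Y", "Z"):
            self.z[qubit] ^= 1

    # Clifford gates: the frame is updated by conjugation, no hardware action.
    def h(self, q):
        self.x[q], self.z[q] = self.z[q], self.x[q]  # H swaps X and Z

    def s(self, q):
        self.z[q] ^= self.x[q]  # S X S^dag = Y, S Z S^dag = Z

    def cnot(self, control, target):
        self.x[target] ^= self.x[control]  # X spreads control -> target
        self.z[control] ^= self.z[target]  # Z spreads target -> control

    def t_needs_s_dagger(self, q):
        """Non-Clifford T: with an X component pending on q, a physical S^dag
        is required right after T. Z commutes with T and needs nothing."""
        return bool(self.x[q])


if __name__ == "__main__":
    frame = PauliFrame(2)
    frame.record(0, "X")  # decoder reports an X error on qubit 0
    frame.cnot(0, 1)      # Clifford: the X now also sits on qubit 1
    frame.h(0)            # Clifford: qubit 0's X becomes a Z
    print("x:", frame.x, "z:", frame.z)
    print("S^dag needed after T on qubit 1:", frame.t_needs_s_dagger(1))
```

Here the T gate on qubit 1 needs an S† correction only because of an error the decoder reported earlier; had that report arrived late, the wrong gate would have been applied.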

Two fronts of difficulty

According to Francesco Battistel, our Roadmap Leader, and colleagues, the problem splits cleanly into two domains:

- Data communication: getting the measurement bits out of the cryostat and through the control stack fast enough. That’s where our group’s engineering focus lies — minimizing signal delay, synchronizing clock domains, and ensuring deterministic latency.

- Decoding algorithms: interpreting the bits on time. That’s where collaborators like Riverlane come in, designing hardware decoders that can process thousands of syndromes every microsecond.

Latency ≠ Throughput

It’s worth stating clearly: latency is the time it takes for one message to get through; throughput is how many you can handle per second. A system can have huge throughput and still fail in fault tolerance if the latency is too high. For feedback-based QEC, low latency is essential.

Low latency enables real-time feedback; high throughput ensures scalability across many logical qubits. Both are required, but latency sets the critical limit.
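A toy model makes the distinction concrete. In the sketch below, all numbers are assumed for illustration: each QEC round lasts cycle_us microseconds, the decoder spends time_per_round_us of processing per round (the inverse of its throughput), and latency_us is the fixed delay between the final syndrome and its correction. A non-Clifford gate waits for the latency plus any backlog, and the backlog grows every round the decoder is slower than the QEC cycle.

```python
from dataclasses import dataclass


@dataclass
class Decoder:
    name: str
    time_per_round_us: float  # processing time per syndrome round (1 / throughput)
    latency_us: float         # delay from the last syndrome round to its correction


def reaction_time_us(dec, rounds_before_gate, cycle_us=1.0):
    """Wait before a non-Clifford gate can use the decoded result (toy model)."""
    # A decoder slower than the QEC cycle falls further behind every round.
    backlog_us = max(0.0, dec.time_per_round_us - cycle_us) * rounds_before_gate
    return dec.latency_us + backlog_us


if __name__ == "__main__":
    decoders = [
        Decoder("high throughput, high latency", 0.2, 50.0),
        Decoder("matched throughput, low latency", 0.9, 2.0),
        Decoder("too slow, low latency", 1.2, 2.0),
    ]
    for rounds in (10, 1000):
        for dec in decoders:
            wait = reaction_time_us(dec, rounds)
            print(f"{dec.name:32s} rounds={rounds:5d}: wait {wait:8.1f} us")
```

The first decoder never falls behind but makes every non-Clifford gate wait 50 µs, slowing the logical clock. The third has low latency, yet its wait grows with circuit length (202 µs after 1,000 rounds in this model). Only the second meets both requirements.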

Feedback-ready control stacks

Control stacks are starting to close the loop — combining high-speed readout, FPGA-based filtering, and real-time decoders that feed corrections back into the pulse scheduler. Figure 3 of our paper sketches this hierarchy.

The onus on the control stack is to minimize latency by executing the syndrome extraction, transmission, decoding, and correction feedback loop with hard real-time guarantees. This necessitates specialized hardware—often FPGAs or custom ASIC architectures—for both data routing and algorithm execution. Current research, as detailed in recent reviews, summarizes avenues for improvement ranging from faster algorithms (e.g., optimized minimum-weight perfect matching) to co-design strategies that deeply integrate the control hardware with the QEC code.
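For intuition about matching-based decoding, the sketch below implements minimum-weight perfect matching for a distance-d bit-flip repetition code by brute-force search over pairings. Each fired parity check (a "defect") is paired either with another defect or with a code boundary, and the pairing that implies the fewest bit flips wins. The exhaustive recursion is exponential in the number of defects and is meant only for small examples; production decoders use efficient blossom-type algorithms (PyMatching, for instance). Function names are illustrative.

```python
from functools import lru_cache
from itertools import combinations


def mwpm_decode(syndrome):
    """Return (weight, qubits_to_flip) for a repetition code of len(syndrome) + 1 qubits.

    Check i compares data qubits i and i + 1. Pairing defects i < j implies flips
    on qubits i+1..j; matching defect i to the left boundary implies flips on
    0..i, to the right boundary flips on i+1..d-1.
    """
    d = len(syndrome) + 1
    defects = tuple(i for i, s in enumerate(syndrome) if s)

    @lru_cache(maxsize=None)
    def best(remaining):
        if not remaining:
            return 0, ()
        first, rest = remaining[0], remaining[1:]
        options = []
        # Option A: match the first defect to the left or right boundary.
        for path in (tuple(range(0, first + 1)), tuple(range(first + 1, d))):
            cost, flips = best(rest)
            options.append((len(path) + cost, path + flips))
        # Option B: pair the first defect with another defect.
        for k, other in enumerate(rest):
            path = tuple(range(first + 1, other + 1))
            cost, flips = best(rest[:k] + rest[k + 1:])
            options.append((len(path) + cost, path + flips))
        return min(options)  # lowest total weight wins

    return best(defects)


def syndrome_of(data):
    return [data[i] ^ data[i + 1] for i in range(len(data) - 1)]


def correct(data, syndrome):
    """Apply the matching's flips (XOR, so any overlapping paths cancel)."""
    _, flips = mwpm_decode(syndrome)
    fixed = list(data)
    for q in flips:
        fixed[q] ^= 1
    return fixed


def all_corrected(d, n_err):
    """True if every pattern of n_err flips on d qubits is decoded back to 0...0."""
    for qs in combinations(range(d), n_err):
        err = [1 if q in qs else 0 for q in range(d)]
        if correct(err, syndrome_of(err)) != [0] * d:
            return False
    return True


if __name__ == "__main__":
    for n_err in (1, 2, 3):
        print(f"{n_err} flip(s) on d=5: all corrected = {all_corrected(5, n_err)}")
```

For d = 5, every one- and two-flip pattern is corrected, while any three flips are "corrected" into the opposite logical state, matching the expectation that a distance-d code handles up to (d − 1)/2 errors.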

How Qblox enables quantum error correction today

Enabling QEC without real-time decoding

Today, we already power QEC-compatible experiments even without full real-time feedback. Our modular control stack scales to hundreds of qubits, a practical necessity given the 100–1000× overhead per logical qubit.

Equally important, our signal chain adds negligible noise. That means the control electronics don’t inflate physical error rates—preserving the "below-threshold" operating regime that determines whether logical error suppression is even possible.

Low-latency feedback and communication

Qblox’s modular architecture includes a deterministic feedback network capable of sharing measurement outcomes within ≈ 400 ns across modules.

This provides the latency budget necessary for fast feedback, active reset, and inter-module coordination during repeated QEC rounds.
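A deterministic feedback latency can be treated as a fixed line item in a budget. The sketch below adds up the stages of an active-reset loop and compares the total with a target window. Only the ≈400 ns cross-module figure comes from the text; the other stage durations and the 1 µs window are hypothetical placeholders to be replaced with numbers from a real setup.

```python
# Hypothetical active-reset feedback loop; durations in nanoseconds.
# Only the ~400 ns cross-module sharing time is taken from the text.
STAGES_NS = {
    "readout_integration": 500,   # assumed
    "state_discrimination": 20,   # assumed
    "cross_module_sharing": 400,  # ~400 ns feedback network (from the text)
    "conditional_pulse": 50,      # assumed
}


def loop_latency_ns(stages):
    """Stages run sequentially, so the loop latency is their sum."""
    return sum(stages.values())


def budget_report(stages, budget_ns):
    """Return (fits, slack_ns); negative slack means the budget is exceeded."""
    slack = budget_ns - loop_latency_ns(stages)
    return slack >= 0, slack


if __name__ == "__main__":
    fits, slack = budget_report(STAGES_NS, budget_ns=1000)  # assumed 1 us window
    print(f"total {loop_latency_ns(STAGES_NS)} ns, fits={fits}, slack={slack} ns")
```

Because each term is deterministic, the slack is a fixed number rather than a statistical tail, which is what makes hard real-time scheduling of repeated QEC rounds possible.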

Integration with real-time decoders

The next step is coupling this feedback layer directly to FPGA-based, GPU-based, or hybrid decoders. By embedding decoding within the same latency domain as the control electronics, we can satisfy both the throughput and latency requirements of superconducting-qubit systems, the most demanding case today. This includes support for open interfaces such as QECi [Ref. 6] and for integration as part of NVIDIA NVQLink [Ref. 7].

Through this approach, Qblox supplies the scalable, noise-minimal, and feedback-ready infrastructure required to bring quantum error correction from demonstration to real-time operation.

Scaling to the fault-tolerant future

Reaching FTQC requires excellence across the entire stack: high-quality fabrication, scalable qubit arrays, optimized gate operations, low-noise analog electronics, and tightly integrated measurement and feedback. Each component contributes directly to whether logical qubits outperform their physical constituents.

The field has seen rapid progress in:

- High-threshold, low-overhead QEC codes capable of supporting larger logical qubits

- Logical gate implementations and lattice surgery, enabling scalable, modular operations

- Magic-state distillation/cultivation, providing the non-Clifford resources for universal computation (currently demonstrated primarily in theoretical and simulated models)

- Real-time logical memories that survive multiple cycles

- Real-time decoding that feeds corrections directly back into the qubit control loops

These advances collectively suggest that FTQC is technically achievable within the next generation of quantum experiments.

Qblox provides the scalable control backbone required to realize these capabilities:

- Analog performance at scale: maintaining low noise and precise control across hundreds of qubits

- High-throughput, low-latency communication: ensuring timely delivery of stabilizer measurements for feedback and correction

- Decoder integration: linking measurement outcomes to real-time decoding pipelines hosted on classical hardware

- Experiment orchestration: coordinating complex sequences of logical operations, lattice surgery, and multi-qubit experiments

Together, this infrastructure allows labs to scale experiments from single logical qubits to larger, fault-tolerant architectures, bridging the gap from demonstration to practical FTQC.

QEC perspectives from Qblox

“Quantum Error Correction (QEC) is essential to achieve quantum utility. However, real-time QEC is extremely challenging. Qblox enables real-time QEC at scale by providing a unique combination of a low-latency communication network, together with logical orchestration and high-throughput interfacing to real-time decoders. Qblox supports a wide spectrum of decoders and QEC codes, including surface codes and qLDPC codes, thanks to the interconnectivity of Qblox’s control stack.”

Francesco Battistel

Roadmap Leader at Qblox
